Mechatronics and Robotics

Autonomous Robotics Competition Vehicle

As part of Team Apollo in CU Boulder’s graduate-level robotics course, I helped develop a camera-based autonomous vehicle using a modified AWS DeepRacer platform. The project required full system integration including hardware tuning, ROS2 software development, and computer vision for navigation, speed zone detection, stop sign compliance, and real-time telemetry. After pivoting from a SLAM-based architecture due to hardware failure, our team engineered a robust OpenCV-based vision pipeline and completed all required challenge features. The final system achieved an average lap time of 2:08, with consistent performance and real-time visualization of vehicle state.

With LiDAR damaged early in the project, we pivoted entirely to camera-based control using OpenCV. I contributed to adapting prior cone-detection code into a reliable line-following algorithm by tuning HSV color ranges and contour detection and by implementing a full PID controller for stable steering. Our pipeline used a Raspberry Pi camera mounted for optimal horizon coverage and leveraged ROS2 nodes to process image data and command motor/servo outputs. For challenge features, I worked on tuning detection thresholds for stop signs and speed limit signs. Each sign was identified by color segmentation: red slowed or stopped the vehicle, and green restored speed. Lighting conditions, contour variability, and PID tuning posed significant challenges, but through iterative testing we achieved over 80% reliability on feature detection.
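The steering loop described above can be sketched in a few lines of Python. This is a minimal illustration, not our actual ROS2 node: the class name, the gains, the clamping range, and the `lateral_error` helper are all assumptions, and the line center is assumed to come from the contour step upstream.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Textbook PID controller. Gains are placeholders, not the team's tuned values."""
    kp: float
    ki: float
    kd: float
    out_limit: float = 1.0              # steering command is clamped to [-out_limit, +out_limit]
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, error: float, dt: float) -> float:
        """Return a clamped steering command for the current lateral error."""
        if dt <= 0:
            raise ValueError("dt must be positive")
        self.integral += error * dt
        # No derivative term on the first sample, since there is no previous error yet.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        raw = self.kp * error + self.ki * self.integral + self.kd * derivative
        return max(-self.out_limit, min(self.out_limit, raw))


def lateral_error(line_center_x: float, image_width: int) -> float:
    """Normalized offset of the detected line from the image center, in [-1, 1]."""
    half = image_width / 2.0
    return (line_center_x - half) / half
```

In a loop like this, each camera frame would yield one line-center estimate, and the controller output would be scaled to the servo's steering range.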

In addition to software contributions, I worked on the physical assembly and testing of the DeepRacer platform, debugging several critical hardware issues that emerged during the semester. I assisted in restacking and reprinting structural components after a crash, identified problems with the fan clearance that caused thermal shutdowns, and adapted the Raspberry Pi’s mounting with custom 3D-printed spacers. On the electronics side, I supported battery diagnostics when a lockout occurred near project close, ensuring we could continue testing by sourcing and validating new power systems. Across development, I helped test performance in real-world conditions, contributing to final integration and tuning that enabled a clean, competitive demo run.

Wall‑E Inspired Autonomous Pet Robot

As part of CU Boulder's Mechatronics and Robotics II course, I co-developed a fully integrated autonomous robot inspired by Pixar’s Wall-E. Our team designed, fabricated, and programmed a platform that combined computer vision, voice interaction, a 4-DOF robotic arm, expressive animated LCDs, and a functional internal compartment, with each subsystem working in concert under a unified ROS-based architecture. My role focused on mechanical integration, actuator control, and electrical system design, culminating in a robot capable of navigating, responding to commands, interacting with physical objects, and expressing human-like emotion through both motion and display. The result was a feature-rich, personality-driven robot that performed reliably on demo day and offered a clear vision for future expansion.

Mechanically, I contributed to the development of Wall-E’s drive system, internal compartment, expressive head assembly, and robotic arm, all of which required tight integration of 3D-printed parts, servos, DC motors, and sensors. I helped design the compartment mechanism using a string-and-spool DC motor with dual limit switches for controlled door motion and a servo-driven tilt plate to offload stored items. In the head assembly, we engineered a servo-actuated socket system and mounted circular LCDs on custom-designed PLA housings to deliver reactive facial expressions. All components were driven through a custom PCB that I helped lay out in Fusion 360 and wire using JST connectors. We powered the system with an 11.1 V LiPo battery and buck converters, distributing voltage cleanly across the Pi, servos, and display components. The most rewarding challenge was resolving the layout and mechanical interferences that emerged during physical assembly, which required rapid iteration and close collaboration between electrical and mechanical subsystems.

Software-wise, we implemented autonomous mobility, voice recognition, gesture recognition, and modular command handling. The movement system used a RealSense camera to avoid obstacles while randomly exploring its environment, and voice commands such as “wave,” “treat,” and “vomit” triggered scripted state machines that actuated the arm and compartment. I worked on servo control mapping and function toggling to ensure smooth transitions between motion states—particularly in the robotic arm, where clearance and joint limitations required careful pre-defined poses rather than full inverse kinematics. Our final demo showcased a robot that could pick up a ball, stow it, emote, and react to user commands—all running on a Raspberry Pi-based control system with modular expansion potential. Despite some integration setbacks with camera-based vision, the result was an animated, expressive autonomous system that captured the charm and personality of the original Wall-E.
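The command handling described above (a recognized word triggering a scripted sequence of pre-defined poses) can be sketched roughly as follows. Everything named here is hypothetical: the actuator names, the pose names, and the specific steps behind "treat" and "vomit" are illustrative guesses, not the sequences we actually ran.

```python
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, str]  # (actuator, named action or pose)

# Hypothetical scripts: actuator and pose names are illustrative only.
SCRIPTS: Dict[str, List[Step]] = {
    "wave": [("arm", "raised"), ("arm", "wave_left"), ("arm", "wave_right"), ("arm", "rest")],
    "treat": [("door", "open"), ("arm", "reach_in"), ("arm", "present"), ("door", "close")],
    "vomit": [("door", "open"), ("tilt_plate", "dump"), ("tilt_plate", "level"), ("door", "close")],
}


def plan(command: str) -> List[Step]:
    """Look up the scripted steps for a recognized word; unknown words yield no steps."""
    return list(SCRIPTS.get(command.strip().lower(), []))


def run(command: str, send: Callable[[str, str], None]) -> int:
    """Send each step to the hardware layer in order and return how many steps ran."""
    steps = plan(command)
    for actuator, action in steps:
        send(actuator, action)
    return len(steps)
```

Keeping the scripts as plain data makes it easy to add a new command without touching the dispatch code, which is one way to get the modularity mentioned above.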

Projectile Launching Competition Robot

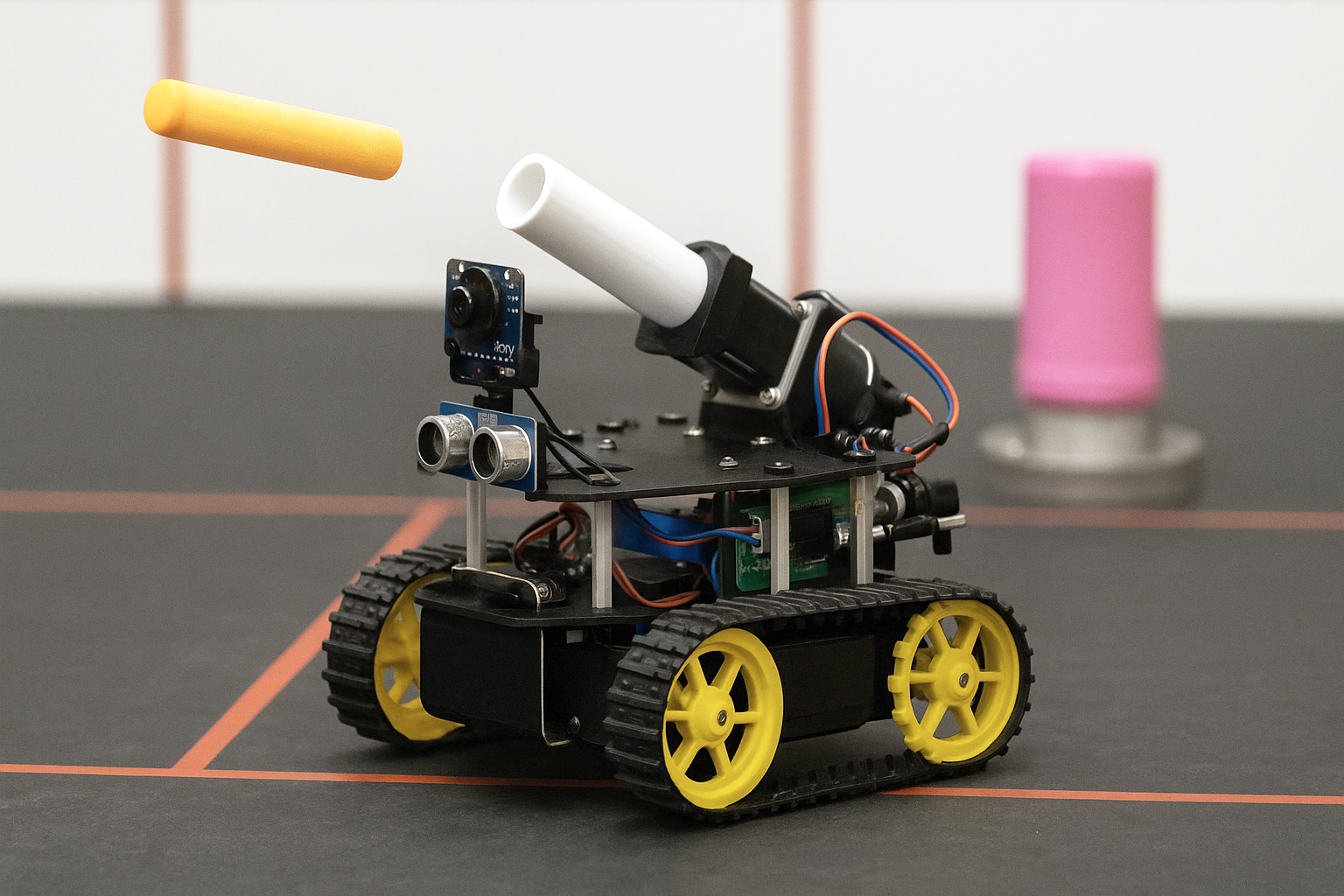

In my Mechatronics & Robotics course at CU Boulder, I co-developed an autonomous “tank” robot that navigates an obstacle-rich arena, identifies opponent bots via computer vision (detecting a pink coffee can), and fires Nerf projectiles to score points. I led the design of the 3D-printed chassis in SolidWorks, the development of motor-driver and sensor daughterboards in Altium, and the integration of motors, IR, sonar, and solenoid systems, all while adapting to a shift from custom PCB fabrication to hand wiring to stay on schedule. The final robot successfully competed in an end-of-semester tournament, demonstrating robust sensor fusion, reliable actuation, and a compact mechanical-electrical integration strategy.

Team Mechaholics sought to meet this challenge and come out on top by creating a robot inspired by the iRobot Roomba. Using motors, IR sensors, solenoids, a sonar sensor, batteries, and PCBs, the team successfully constructed a robotic tank capable of completing these tasks and competing in the end-of-semester tournament.

After finalizing the design of the chassis, I redirected my efforts toward the development of daughterboards that incorporate both the motor drivers and the sensor suite. I had initially designed these boards in Altium, and we planned to mill the PCBs ourselves. However, after careful consideration, the team concluded that manually wiring the circuit boards would be a more efficient approach. This decision was driven by the desire to circumvent the lengthy manufacturing lead times associated with PCB production, thereby accelerating our project timeline and allowing for quicker integration and testing.

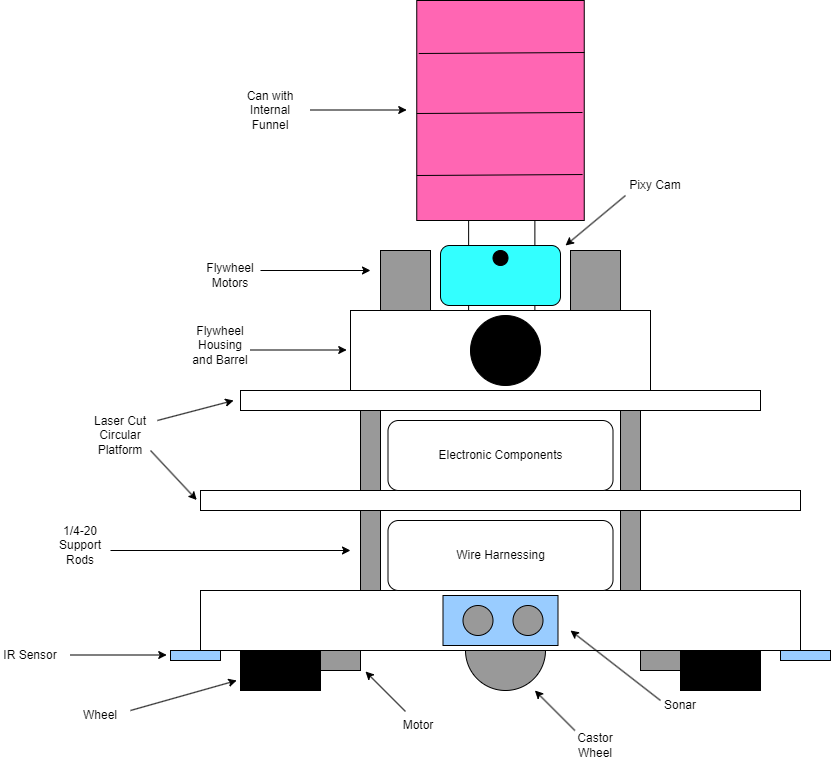

A key aspect of my involvement in this project was designing and manufacturing the chassis. The robot is built on a 3D-printed chassis designed in SolidWorks that provides mounting locations for all electrical and mechanical components. The main base houses the wheels, stepper motors, and locations for the IR sensors and sonar. These mounting locations were built into the 3D-printed design itself to allow for tight packaging of the driving mechanism. Above the main chassis, several levels of laser-cut acrylic support the accompanying electronics, firing mechanism, Pixy monitor, and coffee can target. These levels isolate the electronic components and improve the overall packaging of the system.

Mechatronics was a challenging yet rewarding class that significantly enhanced my understanding of integrating coding and circuitry with mechanical components, an area often underrepresented in the mechanical engineering curriculum. While traditional courses provide some exposure to coding and circuits, they seldom offer enough depth to master these skills. This elective, taken alongside the core curriculum, offered a broader and essential perspective on integrating these skills into complete projects. Its open-ended structure focused on team-based, hands-on work, fostering not only technical skills but also problem-solving abilities through real-world application. This approach, while sometimes frustrating, proved invaluable for learning and growth, making it one of the most beneficial courses for mechanical engineers preparing to enter the workforce.

Graduate Robotic Arm Project

Computer-Vision Control

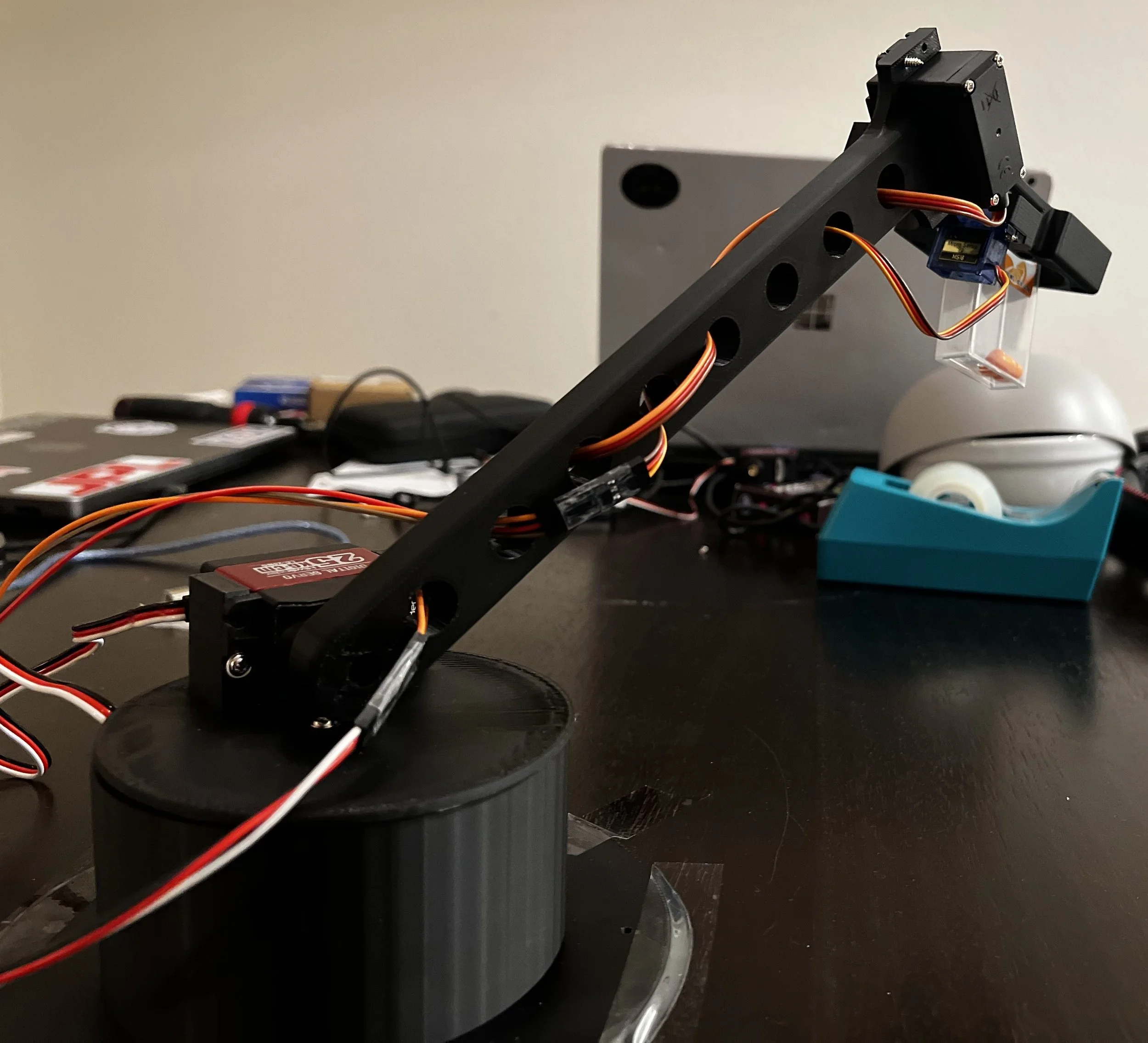

In this graduate-level Mechatronics II project, our team developed a vision-controlled robotic arm that interprets hand gestures via Google’s MediaPipe and executes real-time servo commands using a Raspberry Pi 4. The system integrates a 3D-printed mechanical arm with four degrees of freedom, an Adafruit PWM driver board, and computer vision software capable of interpreting finger positions to control each joint. We successfully demonstrated precision pick-and-place behavior using only finger-based inputs, culminating in a system that responds to live gestures without physical controls or buttons.

Mechanically, we modified our Mechatronics I robotic arm design for improved servo placement, wire routing, and actuation. The base housed a high-torque 25 kg servo for primary rotation, while additional servos controlled the shoulder, wrist, and claw subassemblies. The claw used a micro servo to drive one gripper against a fixed pad, optimizing simplicity and control. All components were printed in PLA using a Bambu Lab X1 Carbon, allowing for rapid design iteration. Electrically, we integrated the servos with an Adafruit ServoKit board, powered by a separate supply, and interfaced the control logic through the Raspberry Pi’s GPIO pins. A breadboard simplified prototyping, and the final circuit layout offered clean modular control across all axes.
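For readers unfamiliar with how a PWM driver board positions a hobby servo, the arithmetic can be sketched as below. The numbers are assumptions rather than our measured values: a 500 to 2500 µs pulse range over 180 degrees, a 50 Hz frame rate, and a 12-bit driver such as the PCA9685 found on Adafruit's 16-channel servo boards. Libraries such as ServoKit typically handle this conversion internally.

```python
def angle_to_pulse_us(angle_deg: float, min_us: float = 500.0,
                      max_us: float = 2500.0, max_angle: float = 180.0) -> float:
    """Map a joint angle to a servo pulse width in microseconds (assumed linear range)."""
    angle = max(0.0, min(max_angle, angle_deg))   # clamp to the servo's travel
    return min_us + (max_us - min_us) * angle / max_angle


def pulse_to_ticks(pulse_us: float, freq_hz: float = 50.0, resolution: int = 4096) -> int:
    """Convert a pulse width to the driver's on-time count for one PWM period."""
    period_us = 1_000_000.0 / freq_hz             # 20,000 us at 50 Hz
    return round(pulse_us / period_us * resolution)
```

Under these assumptions a 90 degree command becomes a 1500 µs pulse, which is 307 of the 4096 counts in each 20 ms frame.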

On the software side, we developed a Python-based control program using MediaPipe to interpret finger landmarks. We implemented a system in which one or two fingers actuated the selected servo, while three or four fingers cycled between motors—making it possible to command multiple degrees of freedom using only hand signals. To minimize latency and improve accuracy, we limited gesture recognition to key finger joints and mapped them to decision logic. Despite the novelty, the system worked reliably in demo conditions: the robot was able to grab a small box and place it on a raised surface with reasonable accuracy. The main challenge was input lag and servo jitter, which we mitigated by refining the PWM driver logic and processing scope. The result is a fully functional CV-controlled robotic manipulator—responsive, flexible, and a solid platform for future gesture-based control development.
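The finger-count decision logic can be sketched independently of MediaPipe. In this illustration the finger count is assumed to arrive from the landmark step upstream, and several details are guesses made for the example: one finger increases the angle and two decreases it, three selects the next joint and four the previous one, the step is 5 degrees, and cycling only fires when the count changes so that a held gesture does not skip through every motor.

```python
from dataclasses import dataclass, field
from typing import List

JOINTS = ("base", "shoulder", "wrist", "claw")


@dataclass
class GestureController:
    """Maps a per-frame finger count to joint selection and angle changes."""
    selected: int = 0
    angles: List[int] = field(default_factory=lambda: [90, 90, 90, 90])
    step_deg: int = 5          # assumed increment per frame
    last_count: int = 0

    def _nudge(self, delta: int) -> None:
        # Clamp to a 0-180 degree travel, typical for hobby servos.
        self.angles[self.selected] = max(0, min(180, self.angles[self.selected] + delta))

    def handle(self, finger_count: int) -> str:
        """Apply one frame's finger count and return the currently selected joint."""
        changed = finger_count != self.last_count
        self.last_count = finger_count
        if finger_count == 1:
            self._nudge(self.step_deg)
        elif finger_count == 2:
            self._nudge(-self.step_deg)
        elif finger_count == 3 and changed:
            self.selected = (self.selected + 1) % len(JOINTS)
        elif finger_count == 4 and changed:
            self.selected = (self.selected - 1) % len(JOINTS)
        return JOINTS[self.selected]
```

Any other count, including a closed fist or no hand in frame, leaves the arm where it is.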

Foundational 3D-Printed Robotic Arm

For our initial Mechatronics project, we designed and built a 3D-printed robotic arm capable of rotating about three axes, grasping an object, and placing (or even throwing) it elsewhere. Using SolidWorks, we modeled five core components including the base, arm, and claw, and fabricated each using PLA filament on a Bambu Lab X1-Carbon printer. The robot was actuated with four servo motors of varying torque ratings, controlled by an Arduino Mega 2560 and breadboarded signal routing. Our final build successfully executed sequenced movements written in C++ using the Servo.h library, demonstrating reliable articulation and basic object interaction.

The project emphasized speed and simplicity in both mechanical and software design. Instead of complex multi-motor kinematics, we opted for a two-jaw claw driven by a single micro servo and a wrench-like arm structure that reduced material and print time through cheese-holing. Torque selection was critical: 25 kg servos handled heavy base and shoulder loads, while lighter 12 kg and micro units were used for wrist and grip actuation. On the software side, we structured all movement through sequenced for-loops, where each joint was mapped to relative angles and time-based execution—allowing for simple, repeatable motions. The success of this version laid the groundwork for our gesture-controlled upgrade in the subsequent semester, while highlighting essential lessons on scope management, component failure, and code structuring.
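The sequencing idea (relative angles stepped through in for-loops with time-based pacing) is shown below as a Python rendering of the logic only. The original ran as C++ on the Arduino with Servo.h, so the function names, the one-degree step size, and the `write` and `sleep` hooks here are assumptions for illustration.

```python
import time
from typing import Callable, Dict, Iterator, List, Optional, Tuple


def sweep(start: int, delta: int, duration_s: float) -> Iterator[Tuple[float, int]]:
    """Yield (delay_s, angle) pairs that move a joint by `delta` degrees, one degree at a time."""
    steps = abs(delta)
    if steps == 0:
        return
    direction = 1 if delta > 0 else -1
    delay = duration_s / steps
    for i in range(1, steps + 1):
        yield delay, start + direction * i


def run_sequence(sequence: List[Tuple[str, int, float]],
                 start_angles: Dict[str, int],
                 write: Optional[Callable[[str, int], None]] = None,
                 sleep: Callable[[float], None] = time.sleep) -> Dict[str, int]:
    """Execute (joint, relative_angle, duration_s) moves in order and return the final angles."""
    angles = dict(start_angles)
    for joint, delta, duration_s in sequence:
        for delay, angle in sweep(angles[joint], delta, duration_s):
            if write is not None:
                write(joint, angle)      # stand-in for a servo write call
            sleep(delay)
        angles[joint] += delta
    return angles
```

Because each move is relative to the joint's last position, a routine such as "lower, close claw, raise, rotate" reads as a short list of tuples and repeats the same way on every run.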